Today I want to repost for my readers a really interesting article by Gionatan Danti, first posted on his blog http://www.ilsistemista.net/. I hope you enjoy it as much as I did.

File compression is an old trick: one of the first programs (if not the first) capable of compressing files was “SQ”, in the early 1980s, but the first widespread, well-known compressor was probably ZIP (released in 1989).

In other words, compressing a file to save space is nothing new and, while current TB-sized, low-cost disks provide plenty of space, compression is sometimes desirable because it not only reduces the space needed to store data, but can even increase I/O performance, since fewer bits have to be written to or read from the storage subsystem. This is especially true when comparing ever-increasing CPU speed to the more-or-less stagnant performance of mechanical disks (SSDs are another matter, of course).

While compression algorithms and programs vary, we can basically distinguish two main categories: generic lossless compressors and specialized lossy compressors.

While the latter category includes compressors with quite spectacular compression factors, they can typically be used only when you want to preserve the general information as a whole and are not interested in a true bit-wise precise representation of the original data. In other words, you can use a lossy compressor for storing a high-resolution photo or a song, but not for storing a compressed executable on your disk (executables need to be stored perfectly, bit for bit) or text log files (we don’t want to lose information in text files, right?).
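To make the "bit for bit" requirement concrete, here is a minimal sketch in Python that round-trips some data through a lossless compressor and checks that nothing changed. It uses the standard `zlib` module purely for illustration; the helper name `roundtrip_is_exact` and the sample log lines are made up for this example and are not part of the original article.

```python
import hashlib
import zlib


def roundtrip_is_exact(data: bytes) -> bool:
    """Compress and decompress data, then compare SHA-256 digests."""
    restored = zlib.decompress(zlib.compress(data))
    return hashlib.sha256(restored).digest() == hashlib.sha256(data).digest()


# Hypothetical sample: a block of repeated log lines.
sample = b"2024-01-01 12:00:00 INFO service started\n" * 1000
print(roundtrip_is_exact(sample))
```

A lossy compressor, by design, would fail this kind of check, which is why it is unsuitable for executables and logs.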

So, for the general use case, lossless compressors are the way to go. But which compressor should you use from the many available? Sometimes different programs use the same underlying algorithm or even the same library implementation, so choosing one or another is of relatively low importance. However, when comparing compressors that use different compression algorithms, the choice must be a weighed one: do you want to favor high compression ratio or speed? In other words, do you need a fast, low-compression algorithm or a slower but more effective one?
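The speed-versus-ratio trade-off can be seen even within a single algorithm. The following is a rough sketch, assuming Python's standard `zlib` module and a synthetic, text-like sample; the `measure` helper is a name invented here, and the numbers it prints will vary with the machine and the data.

```python
import time
import zlib


def measure(data: bytes, level: int) -> tuple[float, float]:
    """Return (compression ratio, elapsed seconds) for one zlib level."""
    start = time.perf_counter()
    compressed = zlib.compress(data, level)
    elapsed = time.perf_counter() - start
    return len(data) / len(compressed), elapsed


# Synthetic sample (an assumption for this sketch): numbered text lines.
sample = b"".join(
    f"line {i}: the quick brown fox jumps over the lazy dog\n".encode()
    for i in range(20000)
)

# Level 1 favors speed, level 9 favors compression ratio.
for level in (1, 6, 9):
    ratio, seconds = measure(sample, level)
    print(f"level {level}: ratio {ratio:.2f}, {seconds * 1000:.2f} ms")
```

On real workloads the gap between levels depends heavily on the input, so measurements like these are only meaningful on data resembling what you actually plan to compress.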

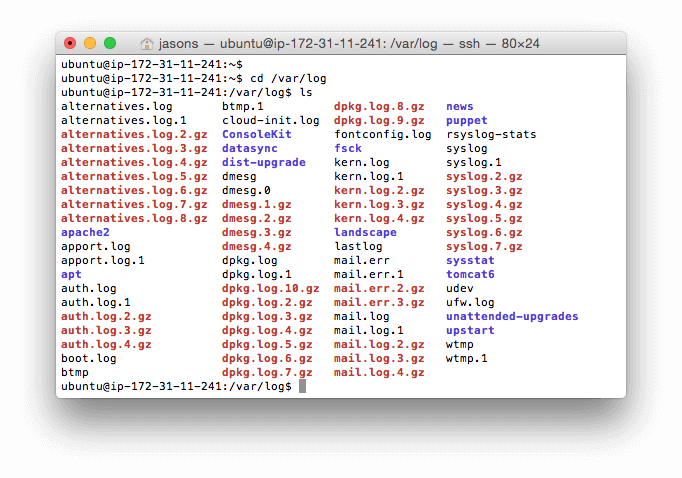

In this article, we are going to examine many different compressors based on a few different compression libraries:

- lz4, a new, high-speed compression program and algorithm

- lzop, based on the fast lzo library, implementing the LZO algorithm

- gzip and pigz (multithreaded gzip), based on the zlib library, which implements the DEFLATE algorithm (the one used by ZIP)

- bzip2 and pbzip2 (multithreaded bzip2), based on the libbzip2 library implementing the Burrows–Wheeler compression scheme

- 7-zip, based mainly (but not only) on the LZMA algorithm

- xz, another LZMA-based program
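As a rough illustration of how such a comparison can be set up, the sketch below times three of the algorithm families listed above using the modules that ship with Python: `zlib` (DEFLATE), `bz2` (Burrows–Wheeler) and `lzma` (LZMA, in the xz container format). It is not the benchmark methodology of the original article; lz4 and lzop are left out because they have no standard-library binding, and the `benchmark` helper and sample data are assumptions made for this example.

```python
import bz2
import lzma
import time
import zlib

# Standard-library stand-ins for three of the algorithm families above.
COMPRESSORS = {
    "zlib (DEFLATE, as in gzip)": zlib.compress,
    "bz2 (Burrows-Wheeler, as in bzip2)": bz2.compress,
    "lzma (LZMA, as in xz)": lzma.compress,
}


def benchmark(data: bytes) -> dict[str, tuple[int, float]]:
    """Map each compressor name to (compressed size, elapsed seconds)."""
    results = {}
    for name, compress in COMPRESSORS.items():
        start = time.perf_counter()
        size = len(compress(data))
        results[name] = (size, time.perf_counter() - start)
    return results


# Synthetic sample (an assumption for this sketch): numbered text lines.
sample = b"".join(
    f"entry {i}: status=ok latency={i % 97}ms\n".encode() for i in range(20000)
)

for name, (size, seconds) in benchmark(sample).items():
    print(f"{name}: {len(sample)} -> {size} bytes in {seconds * 1000:.1f} ms")
```

Each function is called with its default settings, so the output compares default configurations rather than the best each algorithm can do.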
