Logging is critical for every system administrator. If you log too much and don’t manage the files, you could fill up a partition or, even worse, stop a service. If you don’t log enough, you’ll lose information when something goes wrong. In general, a good solution is to send all the logs to a central server that stores them for as long as you need, and to keep just 1 or 2 days of logs on the local machine.

You can set this up easily with rsyslog or syslog-ng to send and receive the logs, and logrotate to rotate the files locally on your machines. Today I want to show you some open source programs that can receive the logs, store them on a filesystem or in a database, and analyse them, presenting the results via web dashboards.
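The "keep a couple of days locally, ship everything to a central server" idea can also be sketched from the point of view of a single application, using only Python's standard `logging` module. This is a minimal illustration, not a replacement for rsyslog or logrotate; the function name `build_logger`, the file path and the central host and port are all assumptions made up for the example.

```python
import logging
import logging.handlers


def build_logger(name, local_path, central_host, central_port=514):
    """Return a logger that keeps about 2 days locally and forwards to a syslog server."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)

    # Local copy: rotate at midnight and keep two old files; older ones are deleted.
    local = logging.handlers.TimedRotatingFileHandler(
        local_path, when="midnight", backupCount=2
    )
    local.setFormatter(
        logging.Formatter("%(asctime)s %(name)s %(levelname)s %(message)s")
    )

    # Remote copy: plain syslog over UDP, which is the handler's default transport.
    # central_host and central_port are placeholders for your own log server.
    remote = logging.handlers.SysLogHandler(address=(central_host, central_port))
    remote.setFormatter(logging.Formatter("%(name)s: %(message)s"))

    logger.addHandler(local)
    logger.addHandler(remote)
    return logger
```

Calling `build_logger("myapp", "/var/log/myapp.log", "logs.example.com")` and then `logger.info("...")` writes each record to the local file and sends a copy to the central host. Because UDP is fire-and-forget, the remote copy is lost if the server is down.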

These are large applications best suited to big companies or, in general, to anyone who wants to keep and manage a lot of data. They are: Apache Flume, Logstash, Graylog2 and Scribe.

Apache Flume

Flume is a distributed, reliable, and available service for efficiently collecting, aggregating, and moving large amounts of log data. It has a simple and flexible architecture based on streaming data flows. It is robust and fault tolerant with tunable reliability mechanisms and many failover and recovery mechanisms. It uses a simple extensible data model that allows for online analytic application.

Its main goal is to deliver data from applications to Apache Hadoop’s HDFS. It is a top-level project at the Apache Software Foundation.

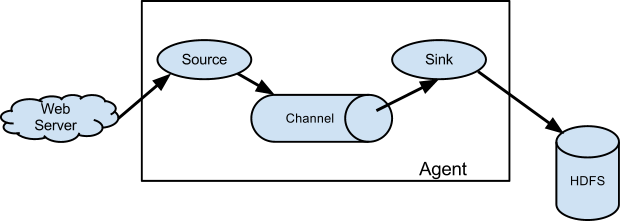

A Flume event is defined as a unit of data flow having a byte payload and an optional set of string attributes. A Flume agent is a (JVM) process that hosts the components through which events flow from an external source to the next destination (hop).

A Flume source consumes events delivered to it by an external source like a web server. The external source sends events to Flume in a format that is recognized by the target Flume source. For example, an Avro Flume source can be used to receive Avro events from Avro clients or other Flume agents in the flow that send events from an Avro sink. When a Flume source receives an event, it stores it into one or more channels. The channel is a passive store that keeps the event until it’s consumed by a Flume sink. The JDBC channel is one example – it uses a filesystem backed embedded database. The sink removes the event from the channel and puts it into an external repository like HDFS (via Flume HDFS sink) or forwards it to the Flume source of the next Flume agent (next hop) in the flow. The source and sink within the given agent run asynchronously with the events staged in the channel.
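To make the source, channel and sink roles more concrete, here is a small conceptual model in Python. It is not Flume's API (Flume is a Java application driven by a configuration file); every class name below is invented for illustration, and an in-memory list stands in for HDFS.

```python
import queue
from dataclasses import dataclass, field


@dataclass
class Event:
    body: bytes                                  # the byte payload
    headers: dict = field(default_factory=dict)  # optional string attributes


class Channel:
    """Passive store: holds events until a sink takes them."""

    def __init__(self, capacity=100):
        self._q = queue.Queue(maxsize=capacity)

    def put(self, event):
        self._q.put(event)

    def take(self):
        """Return the next event, or None when the channel is empty."""
        try:
            return self._q.get_nowait()
        except queue.Empty:
            return None


class Source:
    """Receives data from outside and stores each event in every attached channel."""

    def __init__(self, channels):
        self.channels = channels

    def receive(self, body, **headers):
        event = Event(body, headers)
        for channel in self.channels:
            channel.put(event)


class ListSink:
    """Stand-in for an HDFS sink: removes events from a channel into a list."""

    def __init__(self, channel):
        self.channel = channel
        self.delivered = []

    def drain(self):
        """Move everything currently in the channel; return how many events moved."""
        count = 0
        while True:
            event = self.channel.take()
            if event is None:
                return count
            self.delivered.append(event)
            count += 1
```

A source created as `Source([channel])` and a sink created as `ListSink(channel)` never call each other: the source only writes to the channel and the sink only reads from it, which is what lets the two run asynchronously in a real agent.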

Logstash

Logstash is a tool for managing events and logs. You can use it to collect logs, parse them, and store them for later use (like, for searching). Speaking of searching, logstash comes with a web interface for searching and drilling into all of your logs.

It is fully free and fully open source using the Apache 2.0 license.

Logstash is written in JRuby, but it is released as a standalone jar file for easy deployment, so you don’t need to download JRuby or most other dependencies. As an alternative, it is possible to install it as a Ruby gem.

The life of an event in Logstash

The logstash agent is an event pipeline.

The logstash agent has 3 parts: inputs -> filters -> outputs. Inputs generate events, filters modify them, and outputs ship them elsewhere.

Internal to logstash, events are passed from each phase to the next using internal queues. Each queue is implemented with a ‘SizedQueue’ in Ruby. SizedQueue allows a bounded maximum number of items in the queue, so that any write to the queue blocks if the queue is at maximum capacity.

Logstash sets each queue size to 20. This means only 20 events can be pending for the next phase, which helps reduce data loss and in general stops logstash from trying to act as a data storage system. These internal queues are not for storing messages long-term.

If an output is failing, the output thread will wait until this output is healthy again and able to successfully send the message. Therefore, the output queue will stop being read from by this output and will eventually fill up with events and cause write blocks.
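The blocking behaviour described above can be reproduced in a few lines of Python, where `queue.Queue(maxsize=...)` plays the role of Ruby's SizedQueue. This is only a sketch of the idea, not logstash code: the function `run_pipeline`, the `_DONE` sentinel and the dictionary-shaped events are assumptions made for the example.

```python
import queue
import threading

QUEUE_SIZE = 20     # the per-queue limit quoted above
_DONE = object()    # end-of-stream marker, an invention of this sketch


def run_pipeline(lines, filter_fn, output_fn):
    """Push lines through input -> filter -> output using two bounded queues."""
    to_filter = queue.Queue(maxsize=QUEUE_SIZE)
    to_output = queue.Queue(maxsize=QUEUE_SIZE)

    def input_stage():
        for line in lines:
            # put() blocks while the queue already holds QUEUE_SIZE events.
            to_filter.put({"message": line})
        to_filter.put(_DONE)

    def filter_stage():
        while True:
            event = to_filter.get()
            if event is _DONE:
                to_output.put(_DONE)
                return
            to_output.put(filter_fn(event))

    def output_stage():
        while True:
            event = to_output.get()
            if event is _DONE:
                return
            output_fn(event)

    stages = (input_stage, filter_stage, output_stage)
    threads = [threading.Thread(target=stage) for stage in stages]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
```

If `output_fn` stalls, `to_output` fills up, the filter thread blocks on `put()`, then `to_filter` fills up and the input thread blocks too. The back-pressure travels upstream instead of events piling up in memory.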

For a great introduction and much more on logstash, I suggest taking a look at this video:

Graylog2

Graylog2 is an open source log management solution that stores your logs in ElasticSearch. It consists of a server written in Java that accepts your syslog messages via TCP, UDP or AMQP and stores them in the database. The second part is a web interface that allows you to manage the log messages from your web browser.

Graylog2 is free and open source. It is licensed under the GNU General Public License v3 (GPLv3) and all source code can be browsed on GitHub.

The web interface

All data sent to Graylog2 will appear in the web interface. Use the web interface to search and filter your data. A core part of the web interface is streams: they are basically saved searches that give you quick access to an overview that is already pre-filtered to match, for example, specific parts of your application. You can also run monitoring and alerting on single streams, or directly forward all messages that are matched into a stream to other endpoints.

The Graylog2 server accepts standard syslog via TCP/UDP and GELF via UDP. You can also send in both formats via AMQP. You can configure your syslog daemons to send their data to Graylog2 or log directly from within your applications.
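Logging "directly from within your applications" with GELF over UDP could look roughly like the Python sketch below. It assumes the commonly documented GELF fields (`version`, `host`, `short_message`, `timestamp`, `level`, plus underscore-prefixed extra fields) and a zlib-compressed JSON payload. The server name `graylog.example.com`, the port and the version string are placeholders that you should check against your own installation, and large messages that would need GELF chunking are not handled.

```python
import json
import socket
import time
import zlib


def build_gelf(short_message, host, level=6, **extra):
    """Return one GELF message as zlib-compressed JSON bytes."""
    message = {
        "version": "1.1",           # assumption: use the version your server expects
        "host": host,
        "short_message": short_message,
        "timestamp": time.time(),
        "level": level,             # syslog severity, 6 = informational
    }
    # Additional fields are prefixed with an underscore.
    for key, value in extra.items():
        message["_" + key] = value
    return zlib.compress(json.dumps(message).encode("utf-8"))


def send_gelf(payload, server="graylog.example.com", port=12201):
    """Send one payload as a single UDP datagram (server and port are placeholders)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (server, port))
```

For example, `send_gelf(build_gelf("login failed", "web01", level=4, user="bob"))` would ship one warning-level message with an extra `_user` field. As with any UDP transport, there is no delivery confirmation.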

Logstash and logix are very useful to forward or send logs to a Graylog2 instance.

Scribe

Scribe is a server for aggregating streaming log data. It is designed to scale to a very large number of nodes and be robust to network and node failures. There is a scribe server running on every node in the system, configured to aggregate messages and send them to a central scribe server (or servers) in larger groups. If the central scribe server isn’t available the local scribe server writes the messages to a file on local disk and sends them when the central server recovers. The central scribe server(s) can write the messages to the files that are their final destination, typically on an nfs filer or a distributed filesystem, or send them to another layer of scribe servers.
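The "write to local disk, resend when the central server recovers" behaviour is a classic store-and-forward pattern. The Python sketch below shows the idea under stated assumptions: it is not Scribe's implementation (Scribe is written in C++ and speaks Thrift), and the class `BufferingForwarder`, the `send` callable and the spool file format are all hypothetical.

```python
import json
import os


class BufferingForwarder:
    """Forward messages to a central server, spooling to a local file while it is down."""

    def __init__(self, send, spool_path):
        # send(category, message) must raise ConnectionError when the server is unreachable.
        self.send = send
        self.spool_path = spool_path

    def log(self, category, message):
        try:
            self.flush()                    # older spooled messages go first
            self.send(category, message)
        except ConnectionError:
            with open(self.spool_path, "a", encoding="utf-8") as spool:
                spool.write(json.dumps([category, message]) + "\n")

    def flush(self):
        """Resend spooled messages in order; re-raise if the server is still down."""
        if not os.path.exists(self.spool_path):
            return
        with open(self.spool_path, encoding="utf-8") as spool:
            pending = [json.loads(line) for line in spool if line.strip()]
        while pending:
            category, message = pending[0]
            try:
                self.send(category, message)
            except ConnectionError:
                self._rewrite(pending)      # keep only what is still undelivered
                raise
            pending.pop(0)
        os.remove(self.spool_path)

    def _rewrite(self, pending):
        with open(self.spool_path, "w", encoding="utf-8") as spool:
            for item in pending:
                spool.write(json.dumps(item) + "\n")
```

Flushing before every new send keeps messages in their original order once the central server is reachable again. A production system would also retry on a timer rather than waiting for the next message to arrive.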

Scribe is unique in that clients log entries consisting of two strings, a category and a message. The category is a high level description of the intended destination of the message and can have a specific configuration in the scribe server, which allows data stores to be moved by changing the scribe configuration instead of client code. The server also allows for configurations based on category prefix, and a default configuration that can insert the category name in the file path. Flexibility and extensibility are provided through the “store” abstraction. Stores are loaded dynamically based on a configuration file, and can be changed at runtime without stopping the server. Stores are implemented as a class hierarchy, and stores can contain other stores. This allows a user to chain features together in different orders and combinations by changing only the configuration.
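The routing rules described above (exact category, category prefix, default, and stores that contain other stores) can be modelled in a few lines of Python. The classes `ListStore`, `MultiStore` and `Router` are invented for this sketch and do not correspond to Scribe's real store types or configuration syntax. The "longest prefix wins" rule is also just a choice made here.

```python
class ListStore:
    """Hypothetical in-memory store standing in for a file or network store."""

    def __init__(self, name):
        self.name = name
        self.messages = []

    def write(self, category, message):
        self.messages.append((category, message))


class MultiStore:
    """A store that contains other stores and writes to all of them."""

    def __init__(self, *stores):
        self.stores = stores

    def write(self, category, message):
        for store in self.stores:
            store.write(category, message)


class Router:
    """Pick a store by exact category, then by prefix, then fall back to a default."""

    def __init__(self, exact=None, prefixes=None, default=None):
        self.exact = exact or {}
        self.prefixes = prefixes or {}
        self.default = default

    def store_for(self, category):
        if category in self.exact:
            return self.exact[category]
        matches = [p for p in self.prefixes if category.startswith(p)]
        if matches:
            # Longest matching prefix wins (a choice made for this sketch).
            return self.prefixes[max(matches, key=len)]
        return self.default

    def log(self, category, message):
        store = self.store_for(category)
        if store is not None:
            store.write(category, message)
```

Clients only ever call `router.log(category, message)`. Moving a category to a different destination means changing the dictionaries passed to `Router`, not the client code, which mirrors the point made about Scribe's configuration.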
