Or zip VS gzip VS bzip2 VS xz

In a previous article about the tar program, I mentioned the gzip and bzip2 compression methods as options to create a tarball (and I forgot xz).

To make amends, today I will introduce the main file compression methods and run some tests to see how they behave.

I will consider zip, gzip, bzip2 and xz. I will not test compress, another compression program available on Linux systems, which is now dated and surpassed by the others.

But first, an overview of these four compression methods/programs.

Zip

The ZIP file format is a data compression and archive format. A ZIP file contains one or more files that have been compressed to reduce file size, or stored as-is. The ZIP file format permits a number of compression algorithms.

The format was originally created in 1989 by Phil Katz, and was first implemented in PKWARE’s PKZIP utility,[1] as a replacement for the previous ARC compression format by Thom Henderson. The ZIP format is now supported by many software utilities other than PKZIP (see List of file archivers). Microsoft has included built-in ZIP support (under the name “compressed folders”) in versions of its Windows operating system since 1998. Apple has included built-in ZIP support in Mac OS X 10.3 and later, including other compression formats.

Gzip

GNU Gzip is a popular data compression program originally written by Jean-loup Gailly for the GNU project. Mark Adler wrote the decompression part.

gzip is any of several software applications used for file compression and decompression. The term usually refers to the GNU Project’s implementation of such a tool using Lempel-Ziv coding (LZ77), in which case “gzip” stands for GNU zip. The program was created by Jean-Loup Gailly and Mark Adler as a free software replacement for the compress program used in early Unix systems, and was intended for use by the GNU Project. Version 0.1 was first publicly released on October 31, 1992, and version 1.0 followed in February 1993.

gzip is based on the DEFLATE algorithm, which is a combination of LZ77 and Huffman coding. DEFLATE was intended as a replacement for LZW and other patent-encumbered data compression algorithms which, at the time, limited the usability of compress and other popular archivers.
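zip and gzip both store DEFLATE data, so at the same compression level their output sizes should be almost identical. The benchmark results for bbe.zip and bbe.gz show exactly that. The following is a minimal sketch for checking this on your own machine. It assumes a Linux shell with gzip and, optionally, Info-ZIP zip. The sample.txt input is hypothetical and is generated by the script itself.

```shell
#!/bin/sh
# Sketch: .gz and .zip both wrap DEFLATE-compressed data, so at the same
# level their sizes differ only by container overhead (headers, file
# names, CRCs) and small implementation details.
set -eu

# Hypothetical sample input, generated so the example is self-contained.
seq 1 200000 > sample.txt

gzip -6 -c sample.txt > sample.txt.gz     # .gz container, DEFLATE level 6

rm -f sample.txt.zip                      # zip would otherwise update an old archive
if command -v zip >/dev/null 2>&1; then   # zip is not installed on every system
    zip -q -6 sample.txt.zip sample.txt   # .zip container, DEFLATE level 6
fi

# Print raw byte counts for comparison, skipping missing files.
for f in sample.txt sample.txt.gz sample.txt.zip; do
    [ -e "$f" ] || continue
    printf '%10s %s\n' "$(wc -c < "$f")" "$f"
done
```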

bzip2

bzip2 is a free and open source lossless data compression algorithm and program developed by Julian Seward. Seward made the first public release of bzip2, version 0.15, in July 1996. The compressor’s stability and popularity grew over the next several years, and Seward released version 1.0 in late 2000.

bzip2 compresses most files more effectively than the older LZW (.Z) and Deflate (.zip and .gz) compression algorithms, but is considerably slower. LZMA is generally more efficient than bzip2, while having much faster decompression.

bzip2 compresses data in blocks of size between 100 and 900 kB and uses the Burrows–Wheeler transform to convert frequently-recurring character sequences into strings of identical letters. It then applies move-to-front transform and Huffman coding. bzip2’s ancestor bzip used arithmetic coding instead of Huffman. The change was made because of a software patent restriction.
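bzip2's -1 to -9 flags select the block size described above: 100 kB to 900 kB blocks, with -9 as the default. The small sketch below compresses a hypothetical generated sample.txt at both extremes. It then reads the block-size digit from each stream header, which is the "BZh" magic followed by a digit from 1 to 9.

```shell
#!/bin/sh
# Sketch: bzip2 -1 ... -9 selects the block size (100 kB ... 900 kB).
# Larger blocks give the Burrows-Wheeler transform more context in which
# to group repeated sequences, at the cost of more memory.
set -eu

# Hypothetical sample input (a few MB of repetitive text).
seq 1 500000 > sample.txt

bzip2 -1 -c sample.txt > sample.1.bz2   # 100 kB blocks
bzip2 -9 -c sample.txt > sample.9.bz2   # 900 kB blocks (also bzip2's default)

wc -c sample.txt sample.1.bz2 sample.9.bz2

# The block size is recorded in the stream header: "BZh1" ... "BZh9".
head -c 4 sample.1.bz2; echo
head -c 4 sample.9.bz2; echo
```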

bzip2 is asymmetric, as decompression is relatively fast. Motivated by the large CPU time required for compression, a modified version was created in 2003 called pbzip2 that supported multi-threading, giving almost linear speed improvements on multi-CPU and multi-core computers.
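pbzip2 writes bzip2-compatible output, so you can use it opportunistically. The sketch below uses pbzip2 when it is installed and falls back to plain bzip2 otherwise. It assumes a typical GNU/Linux setup, where nproc comes from GNU coreutils and pbzip2's -p option sets the processor count. The sample.txt input is hypothetical and generated by the script.

```shell
#!/bin/sh
# Sketch: parallel bzip2 compression when pbzip2 is available, plain
# bzip2 otherwise. Either result can be decompressed with "bzip2 -d".
set -eu

seq 1 500000 > sample.txt   # hypothetical sample input

if command -v pbzip2 >/dev/null 2>&1; then
    # -p sets the number of processors; nproc (GNU coreutils) reports the CPU count.
    pbzip2 -p"$(nproc)" -c sample.txt > sample.txt.bz2
else
    bzip2 -c sample.txt > sample.txt.bz2
fi

# Round-trip check with the standard bzip2 decompressor.
bzip2 -dc < sample.txt.bz2 | cmp - sample.txt && echo "round trip OK"
```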

XZ Utils

XZ Utils is free general-purpose data compression software with high compression ratio. XZ Utils were written for POSIX-like systems, but also work on some not-so-POSIX systems. XZ Utils are the successor to LZMA Utils.

The core of the XZ Utils compression code is based on LZMA SDK, but it has been modified quite a lot to be suitable for XZ Utils. The primary compression algorithm is currently LZMA2, which is used inside the .xz container format. With typical files, XZ Utils create 30 % smaller output than gzip and 15 % smaller output than bzip2.
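The short sketch below shows the .xz container in practice, using a hypothetical sample.txt. xz's default preset is -6, and -k keeps the input file. The -l (--list) option prints the stream and block summary, the compression ratio, and the integrity check type, which is CRC64 by default.

```shell
#!/bin/sh
# Sketch: compress with xz's default preset and inspect the .xz container,
# which holds LZMA2-compressed data plus an integrity check.
set -eu

seq 1 500000 > sample.txt   # hypothetical sample input

xz -6 -k -f sample.txt      # -k keeps sample.txt, -f overwrites an old sample.txt.xz
xz -l sample.txt.xz         # streams, blocks, sizes, ratio and check type
```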

XZ Utils consist of several components:

* liblzma is a compression library with API similar to that of zlib.

* xz is a command line tool with syntax similar to that of gzip.

* xzdec is a decompression-only tool smaller than the full-featured xz tool.

* A set of shell scripts (xzgrep, xzdiff, etc.) have been adapted from gzip to ease viewing, grepping, and comparing compressed files.

* Emulation of command line tools of LZMA Utils eases transition from LZMA Utils to XZ Utils.
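The helper scripts let you read, search and compare .xz files without first decompressing them to disk. Here is a small illustration with two hypothetical files, a.txt and b.txt:

```shell
#!/bin/sh
# Sketch: working with .xz files in place, using tools shipped with XZ Utils.
set -eu

# Hypothetical sample files.
printf 'alpha\nbeta\ngamma\n' > a.txt
printf 'alpha\nbeta\ndelta\n' > b.txt
xz -k -f a.txt b.txt               # creates a.txt.xz and b.txt.xz, keeps the originals

xzcat a.txt.xz                     # print the decompressed contents to stdout
xzgrep -n 'gamma' a.txt.xz         # grep inside the compressed file
xzdiff a.txt.xz b.txt.xz || true   # diff exits with status 1 when the files differ
```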

Benchmark

I always used the basic commands without adding options. With “filename” as the name of the file under test, I used:

zip filename.zip filename

gzip filename

bzip2 filename

xz filename
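To repeat the benchmark on your own files, you can wrap the commands above in a small script. This is a sketch, not the exact procedure used here, and it differs in three ways. It runs gzip, bzip2 and xz with -c so the original file is not replaced. It skips zip when zip is not installed. It also generates a hypothetical sample.txt when no file is given.

```shell
#!/bin/sh
# Sketch: compress one file with each tool's default settings and list
# the resulting sizes in bytes, largest first.
# Usage: ./compare.sh FILE   (without FILE, a hypothetical sample is generated)
set -eu

if [ $# -ge 1 ]; then
    f=$1
else
    f=sample.txt
    seq 1 200000 > "$f"
fi

rm -f "$f.zip"                       # zip would otherwise update an old archive
if command -v zip >/dev/null 2>&1; then
    zip -q "$f.zip" "$f"             # zip is not installed on every system
fi
gzip  -c "$f" > "$f.gz"              # -c writes to stdout and keeps the original
bzip2 -c "$f" > "$f.bz2"
xz    -c "$f" > "$f.xz"

for out in "$f" "$f.zip" "$f.gz" "$f.bz2" "$f.xz"; do
    [ -e "$out" ] || continue
    printf '%s %s\n' "$(wc -c < "$out" | tr -d ' ')" "$out"
done | sort -rn
```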

Text

Test made with the Bible in Basic English (bbe) text file; the uncompressed file is 4467663 bytes (4 MB).

These are the results:

| File | Size (bytes) |
|------|-------------:|
| bbe | 4467663 |
| bbe.zip | 1282842 |
| bbe.gz | 1282708 |
| bbe.xz | 938552 |
| bbe.bz2 | 879807 |
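These numbers are easier to compare as percentages. The small awk sketch below prints each compressed size as a percentage of the original 4467663 bytes, using only the byte counts listed above. On this file bzip2 comes out ahead of xz, unlike the larger tests that follow. The ratios function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print each compressed size as a percentage of the
# original bbe file (4467663 bytes), using the byte counts reported above.
ratios() {
    awk -v orig=4467663 '{ printf "%-8s %6.2f%%\n", $2, 100 * $1 / orig }' <<'EOF'
1282842 bbe.zip
1282708 bbe.gz
938552 bbe.xz
879807 bbe.bz2
EOF
}

ratios
```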

With a bigger 41 MB text file, the results are:

| File | Size |
|------|-----:|
| big2.txt | 41M |
| big2.txt.zip | 15M |
| big2.txt.gz | 15M |
| big2.txt.bz2 | 11M |
| big2.txt.xz | 3.6M |

Another test, with default options, on a 64 MB text file (sizes in MB and KB):

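| File | Size (MB) | Size (KB) |
|------|----------:|----------:|
| big3.txt | 64M | 65427K |
| big3.txt.zip | 26M | 25763K |
| big3.txt.gz | 26M | 25763K |
| big3.txt.bz2 | 24M | 23816K |
| big3.txt.xz | 2.2M | 2237K |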

In a further test, as suggested in the comments, I used the -9 flag (maximum compression) with these commands:

zip -9 big3.txt.zip big3.txt

gzip -9 -c big3.txt > big3.txt.gz

bzip2 -9 -c big3.txt > big3.txt.bz2

xz -e -9 -c big3.txt > big3.txt.xz

And the results (sizes in MB and KB). bzip2 does not change, because its default is already -9 (900 kB blocks):

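| File | Size (MB) | Size (KB) |
|------|----------:|----------:|
| big3.txt | 64M | 65427K |
| big3.txt.zip | 25M | 25590K |
| big3.txt.gz | 25M | 25590K |
| big3.txt.bz2 | 24M | 23816K |
| big3.txt.xz | 2.2M | 2218K |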

Avi

I used a 700 MB XviD-encoded file; as expected, the compression results were quite poor:

| File | Size |
|------|-----:|
| file.avi | 700 MB |
| file.avi.zip | 694 MB |
| file.avi.gz | 694 MB |
| file.avi.xz | 694 MB |
| file.avi.bz2 | 693 MB |
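Video codecs such as XviD already remove most of the redundancy, so a general-purpose compressor has almost nothing left to find. The sketch below reproduces the effect with random bytes standing in for already-compressed data. The random.bin file is hypothetical. Expect each output to be about the same size as the input, or slightly larger.

```shell
#!/bin/sh
# Sketch: random bytes stand in for already-compressed data (video, MP3,
# JPEG); general-purpose compressors cannot shrink them.
set -eu

head -c 5000000 /dev/urandom > random.bin   # hypothetical 5 MB input

gzip  -c random.bin > random.bin.gz
bzip2 -c random.bin > random.bin.bz2
xz    -c random.bin > random.bin.xz

wc -c random.bin random.bin.gz random.bin.bz2 random.bin.xz
```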

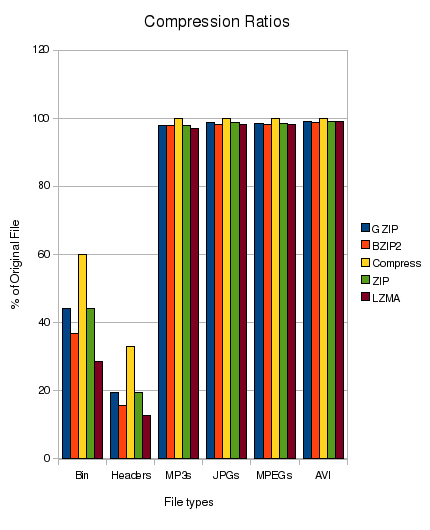

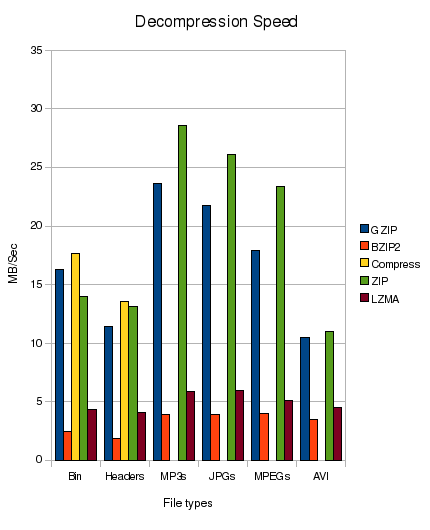

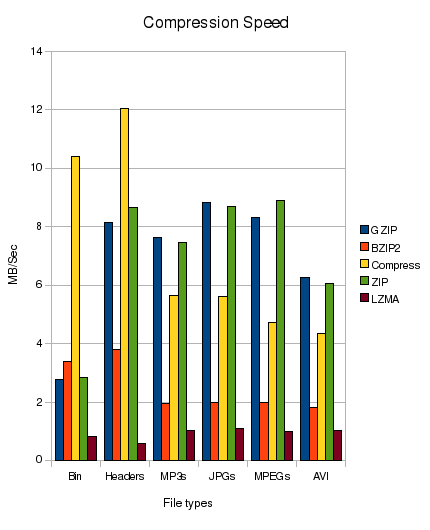

On another site I found a very similar test, which I include for completeness. The tests were made with:

- Binaries 6,092,800 Bytes taken from my /usr/bin directory

- Text Files 43,100,160 Bytes taken from kernel source at /usr/src/linux-headers-2.6.28-15

- MP3s 191,283,200 Bytes, a random selection of MP3s

- JPEGs 266,803,200 Bytes, a random selection of JPEG photos

- MPEG 432,240,640 Bytes, a random MPEG encoded video

- AVI 734,627,840 Bytes, a random AVI encoded video

I have tarred each category so that each test is only performed on one file (as far as I’m aware, tarring the files does not affect the compression tests). Each test was run from a script 10 times and the average was taken, to make the results as fair as possible.
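The quoted methodology (tar each category, compress it 10 times, take the mean) can be sketched as follows. This is not the other site's script. It assumes GNU date, whose %N format gives nanoseconds, and it creates a hypothetical sample_dir when no directory is given. The timing includes reading and writing files, so disk I/O is part of each measurement.

```shell
#!/bin/sh
# Sketch of the quoted methodology (not the original author's script):
# tar the input into a single file, compress it RUNS times with each
# tool, and report the arithmetic mean of the wall-clock time.
# Assumes GNU date (%N = nanoseconds).
# Usage: ./timing.sh DIR   (without DIR, a hypothetical sample dir is created)
set -eu

RUNS=10

if [ $# -ge 1 ]; then
    src=$1
else
    src=sample_dir
    mkdir -p "$src"
    seq 1 100000 > "$src/numbers.txt"
fi

tar -cf sample.tar "$src"     # one file per category, as in the quoted test

for tool in gzip bzip2 xz; do
    total=0
    i=0
    while [ "$i" -lt "$RUNS" ]; do
        start=$(date +%s%N)
        "$tool" -c sample.tar > "sample.tar.$tool"
        end=$(date +%s%N)
        total=$((total + end - start))
        i=$((i + 1))
    done
    size=$(wc -c < "sample.tar.$tool" | tr -d ' ')
    echo "$tool: mean $((total / RUNS / 1000000)) ms over $RUNS runs, $size bytes"
done
```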

Conclusions

Based on my tests, and on those I found on other sites, I would recommend LZMA (aka XZ): it is a bit slower and uses more CPU cycles, but it achieves higher compression ratios on text files, which are typically the files we want to compress.

Add to that it is fully integrated with tar (option -J) and is easy to integrate with logrotate.

On the other hand, if CPU or time is an issue, you can go with gzip, which is also more widely used and known (useful if you have to send your archive somewhere).
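Here are both integrations in a short sketch. The tar part uses GNU tar's -J option on a hypothetical project directory. The logrotate part writes a hypothetical configuration for a hypothetical /var/log/myapp directory, using the compresscmd, uncompresscmd and compressext directives. It also assumes xz and unxz are installed in /usr/bin.

```shell
#!/bin/sh
# Sketch: xz with tar (-J) and with logrotate.
set -eu

mkdir -p project && echo "hello" > project/readme.txt   # hypothetical input

tar -cJf project.tar.xz project   # create: -J filters the archive through xz
tar -tJf project.tar.xz           # list the contents (-xJf would extract them)

# Hypothetical logrotate snippet telling logrotate to use xz instead of gzip.
# Copy it under /etc/logrotate.d/ (as root) to make it active.
cat > myapp.logrotate <<'EOF'
/var/log/myapp/*.log {
    weekly
    rotate 4
    compress
    compresscmd /usr/bin/xz
    uncompresscmd /usr/bin/unxz
    compressext .xz
}
EOF
```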


I would like to thank you for your most interesting blog.

Occasionally, I try to read the Italian one…

Best wishes.

For bzip2, gzip and xz, you need to do the tests again with the -9 or --best option enabled. Since you seem to only be concerned about the compression ratio, and not the time it takes, it is only fair to use the settings that the compressors themselves deem best.

Also, tar’s option j is for bzip2 on my Ubuntu 10.04 LTS system. What system are you using?

Finally, you have typo’d xz as xv in the second paragraph.

Thanks for the feedback.

Typo corrected. The -j (lowercase) option is for bzip2 and -J (uppercase) is for xz; at least, this works on Ubuntu 10.10.

I’m now redoing the test on the text files with your suggested options, and I’ll publish the results later.

Thanks

An interesting write-up; unfortunately the graphs lack context and meaning. Could you clarify a few points:

Are the speeds in compressed or uncompressed data?

Do the times include iowait/disk access?

Which average was taken (mean)?

What kind of error is there in the measurements (e.g. the compression ratios for media all look like they would be the same if error bars were added)?

Hello,

Lots of technical questions, but I don’t have the answers :). The graphs are taken from another article (http://blog.terzza.com/linux-compression-comparison-gzip-vs-bzip2-vs-lzma-vs-zip-vs-compress/); try checking with its author.

If you like these benchmarks check also: http://tukaani.org/lzma/benchmarks.html

What about 7z ?

I don’t use 7z because you usually don’t find it among the standard packages installed by most distros and, more importantly, it is not integrated with tar.

That said, I must say that 7z (in my test) gave the highest compression ratio. On the 64 MB text file I used the command:

7zr a -t7z -m0=lzma -mx=9 -mfb=64 -md=32m -ms=on big3.txt.7z big3.txt

And I got a 2.2M big3.txt.7z, the same result as XZ.

Still, due to its integration with tar, I’d prefer XZ as the standard compression tool.

Thanks for the tips.

Nice comparison, but it would have been a bit better if the same file was used for default compression and -9 compression. This would have made the results comparable.

The big2.txt file was generated randomly and I deleted it at the end of the test, so I was not able to repeat the test on it.

But I’m now including in the article the results of default compression (no options) for the big3.txt file.

No RAR?

I don’t use rar for the same reason as 7zip (no integration with tar), but I did a test, and on the 64 MB text file I got this result: 21579K big3.txt.rar

That’s better than gzip and bzip2, so if you are used to rar it’s quite good at compression, but still not as good as xz and 7z.

Bye

The AV file formats (.mp3, .jpg, etc.) are already compressed by their coding algorithms. For meaningful results, check the compression of raw image or sound files, then compare the “compressed” versions with the encoded versions for size. I predict that the compression ratios will be similar. However, not all the AV codings are “lossless” (reversible).

“..no integration with tar..”

please, run this:

tar --help | grep '[-]I'

which prints:

-I, --use-compress-program=PROG
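The sketch below shows both styles with GNU tar on a hypothetical project directory. The --use-compress-program option (or its short form -I) pipes the archive through an external program and calls that program with -d when reading. For the lazy, the XZ_OPT environment variable passes options such as -9 to xz when using plain -J.

```shell
#!/bin/sh
# Sketch: GNU tar with an external compressor, plus XZ_OPT for options.
set -eu

mkdir -p project && echo "hello" > project/readme.txt   # hypothetical input

tar --use-compress-program=xz -cf project.tar.xz project   # like tar -cJf
tar -I xz -tf project.tar.xz                                # short form; runs "xz -d" to read

# xz reads extra options from XZ_OPT, which also works with tar's built-in -J.
XZ_OPT=-9 tar -cJf project-max.tar.xz project
```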

Ok, but still too long for the lazy 😛