After some study, or perhaps a specialist course or presentation, you’d like to start implementing in your company the best practices you have learned, and perhaps start a new and better era for your IT department.

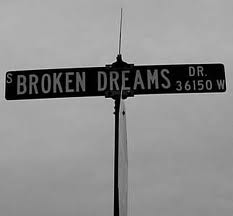

But it seems that something always goes wrong, or there are unexpected difficulties that make all your plans, and dreams, fail; and after some fighting you usually end up saying “OK, that WAS the best practice, and we are sure not to follow it”.

This is my list of things I’ve found impossible to achieve in some years of work.

Proper planning for projects

Before starting anything, I’d like to know the main goal of the project, the customer’s requirements, the budget, how long the project must stay online or in service, and how many people can work on it. Once I know all this, I can design the project with all the needed software and hardware and estimate the time needed to complete every phase.

With proper planning everything becomes easier, and you can install and maintain many systems and services online without too much trouble.

What usually happens to me is:

“We got a new customer, he wants his new portal ready in 2 weeks, his website gets a lot of traffic and must always be online, so build something with high availability!”

“We got a new customer, we must finish his mega project XX in 2 weeks, but it’s only a couple of Linux machines with YYY (put an unknown distro here) that use this software (put an unknown software here) with this DB (put an unknown DB here).”

“We got a new customer, he wants his e-commerce site load balanced across 4 machines, everything replicated and maintained by us, he asks for 99.9999% uptime, and the budget for the project is YYY (put an excessively low budget here).”
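To put that last request in perspective, it helps to turn an uptime percentage into a downtime budget. The sketch below is plain arithmetic and assumes a 365-day year; the function name `allowed_downtime_seconds` is made up for this example.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # assumption: a non-leap year


def allowed_downtime_seconds(uptime_percent, period_seconds=SECONDS_PER_YEAR):
    """Return how many seconds of downtime fit in the period at this uptime."""
    return period_seconds * (100.0 - uptime_percent) / 100.0


if __name__ == "__main__":
    # Print the yearly downtime budget for a few common targets.
    for target in (99.0, 99.9, 99.99, 99.999, 99.9999):
        seconds = allowed_downtime_seconds(target)
        print(f"{target}% uptime -> {seconds / 60:.2f} minutes of downtime per year")
```

Under these assumptions, 99.9999% leaves roughly half a minute of downtime per year, which is hard to reconcile with an excessively low budget.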

Security

Security is an important thing in every company; you can have good services, but your customers won’t be happy knowing that all their information has been stolen (Sony?).

Security is not something you can do in your spare time or once every 6 months; it’s a set of best practices, rules and configurations that must be followed every day. Sadly, until it’s too late no one seems interested in security.

For example, you could have a standard set of rules for creating accounts on machines, a firewall set up on every Linux machine and a standard set of configurations; this is a good starting point, but it becomes pointless if you receive requests like:

“Add an account on the DB server for John Smith, he’s a new consultant who must be able to start/stop the DB and change the DB files.”

“Open these ports on the firewall of machine XX (or stop the firewall), the development team must do something and the firewall is stopping them.”

“Don’t update the software on these servers, the development team is not sure that their software works with the new version of libXX.”

And this last one brings up a new point:

Updates

My motto could be “better updated than sorry”: on my desktops I update the OS the day a release becomes stable, and on servers I’d like to have a planned monthly day for updates, and be ready to do some extra updates if a big security bug comes out.

Having an updated system means that you are safe from known security bugs and code bugs, and that you have all the latest features of that software. Naturally, if you use a piece of software you should know what “function XX is now called XX_h” means, and know whether your software could be affected or not.

If in doubt, apply the updates on a test server first and, if all is fine, update the production server.

What happens many times is that a lot of managers use the motto “If it works, don’t touch it”, so I’ve seen installations of Linux distributions made from the original CD and never updated: “the service is up, why change something?”

Or worse, many people who don’t fully understand open source now use open source CMSs (Drupal, Joomla, WP) because they are great and free; for them the magic words are “free of license”. What these people don’t understand is that you cannot keep a 2-year-old open source CMS online, with everyone knowing its security bugs, and hope that it will work forever. I’ve seen at least a dozen sites hacked through known CMS bugs.

Or the worst thing is when I ask the developers if I can upgrade XX (put a Perl module, PHP, or a JVM here), and usually their answer is “We certify only version FF, the one that we have been using for the past year”, and so I cannot update anything…
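A small audit can at least make the backlog visible. The following is a minimal sketch, assuming you already have an inventory of installed versions and a list of minimum acceptable versions; every host name, package name and version number here is invented for illustration, and only purely numeric dotted versions with the same number of components are handled.

```python
def parse_version(text):
    """Turn a dotted version such as '7.2.1' into a tuple of ints for comparison."""
    return tuple(int(part) for part in text.split("."))


def find_outdated(inventory, minimum_versions):
    """Return (host, software, installed, minimum) for every outdated install."""
    outdated = []
    for host, software, installed in inventory:
        minimum = minimum_versions.get(software)
        if minimum is not None and parse_version(installed) < parse_version(minimum):
            outdated.append((host, software, installed, minimum))
    return outdated


# Hypothetical data: hosts, package names and version numbers are invented.
INVENTORY = [
    ("web01", "examplecms", "3.1.4"),
    ("web02", "examplecms", "3.10.0"),
    ("app01", "libxx", "1.2"),
]
MINIMUM_VERSIONS = {"examplecms": "3.9.2", "libxx": "1.2"}

if __name__ == "__main__":
    for host, software, installed, minimum in find_outdated(INVENTORY, MINIMUM_VERSIONS):
        print(f"{host}: {software} {installed} is older than {minimum}")
```

Versions are compared as tuples of integers rather than as strings, because a plain string comparison would wrongly rank "3.10.0" below "3.9.2".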

Uniform environment

To make things easy, the best solution would be to have 1 distribution at the same release level on all servers and to use only 1 application stack to deliver services (Java, Ruby, PHP).

I can understand that this can become too strict: over time new releases of the distribution come out and it’s not so easy to upgrade all the servers, or different groups may use different software.

What I really don’t understand is the use of many different solutions in the same data center, such as: Red Hat for the JBoss machines, Debian for the LAMP servers, CentOS for the development machines and SUSE for the DB. And when you ask why, sometimes the answer is “the former sysadmin liked that distro” or “the consultant told us to use that distro”, which makes no sense at all to me; having multiple distros means that your system administrator must have much more knowledge.
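To see how mixed a data center really is, you can count hosts per distribution. This is a minimal sketch, assuming the contents of each host’s `/etc/os-release` file (available on distributions that ship it) have already been collected somehow; the host names and sample contents are invented, and the parser only handles simple `KEY=value` lines.

```python
from collections import Counter


def parse_os_release(text):
    """Parse KEY=value lines in the os-release format into a dict."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Simplification: just drop surrounding quotes, no escape handling.
        fields[key] = value.strip().strip('"').strip("'")
    return fields


def count_distributions(os_release_by_host):
    """Count hosts per (distribution ID, version) pair."""
    counts = Counter()
    for text in os_release_by_host.values():
        fields = parse_os_release(text)
        counts[(fields.get("ID", "unknown"), fields.get("VERSION_ID", "unknown"))] += 1
    return counts


# Hypothetical inventory: host names and file contents are invented samples.
SAMPLES = {
    "jboss01": 'ID="rhel"\nVERSION_ID="7.9"\n',
    "lamp01": 'ID=debian\nVERSION_ID="12"\n',
    "lamp02": 'ID=debian\nVERSION_ID="12"\n',
    "dev01": 'ID="centos"\nVERSION_ID="7"\n',
}

if __name__ == "__main__":
    for (distro, version), hosts in count_distributions(SAMPLES).most_common():
        print(f"{distro} {version}: {hosts} host(s)")
```

A long list of different pairs is a rough indicator of how much extra knowledge the system administrator is expected to carry.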

And the same thing applies to the software stack for the service: recently I’ve seen a service that uses Tomcat with a Java application for one section of the site, Apache and Perl for another, and PHP to run the scheduled tasks via crontab. Madness in my eyes…

Conclusions

I love being a system administrator, and in my dreams there is a logic behind all the machines and services I’m responsible for, but like many dreams this clashes with reality.

This is why I got out of IT. I was also tired of having to repeatedly explain why I didn’t arrive back at work before noon after being up past midnight working on the network. For some reason it didn’t seem logical to management to take the network offline after the majority of computer users went home. I guess they may have believed that most of the users were lazy so having their systems unusable for a few hours didn’t affect productivity.

You should check out the IT horror stories at the Computerworld Shark Tank. Get yourself a free T-shirt for sharing one. I did.

i am a developer and system administrator, so i can understand both sides.

the job of a sysadmin is to serve the users, because if you don’t serve them they will work around you and your job will be much worse.

on your security points for example, i’d go find out why these requests are made. what is it that the db guy really needs to do? what are the developers trying to do? maybe they need to be put in a separate subnet to protect the rest of the network while the developers are more exposed.

as for the update of software, that’s sometimes an outside problem, sometimes it is just an issue of timing. not sure how it can be helped other than strengthening outside protection, maybe having policies that require developers’ machines to be up to date, and keeping developers alerted about upcoming upgrades so they can prepare for them. i’d maybe also make the security team part of the development team and have them involved in decisions about which versions are best to support.

as for the uniform environment, i simply disagree with that one.

one of my clients had standardized on fedora. sure, not the best choice, but it worked.

then asterisk came along.

asterisk is best supported on centos. do you think i put it on a fedora machine? no way. that would have meant extra work for me for something that is meant to be extra stable.

people can deal with a hiccup on a desktop. but have the phones go down and they cry bloody murder.

yes, tracking multiple distributions is more work if they all do the same job, but i found that some distributions are not suitable for some things. for servers i want something really stable, debian stable, centos, etc…

but on desktops people want newer software, so we need something like ubuntu, fedora. or even foresight.

developers are a mixed bag, on the one hand they need new versions of tools, and on the other they may need to deploy on stable servers. the best advice i have seen here recommends that every developer have a different system, that way ensuring that the product being developed runs on the widest selection of different systems (that includes mac os, windows and a few different linux distributions, maybe even bsd or solaris.)

also, a homogeneous environment is easier to attack. if the attacker finds a way into one machine, then all machines are accessible, whereas in a heterogeneous system such attacks are less easy to exploit.

greetings, eMBee.

Thanks for the long answer eMBee, I’m sure there are two sides to the coin, and for developers a sysadmin with his weird ideas could be a nightmare.

And for distros, desktop and server should follow different paths IMO, just keep the same package manager if possible, so Red Hat/CentOS on servers and Fedora on desktops, or Debian on servers and Ubuntu on desktops…

Maybe you should also take some courses to learn Italian!

Well, it certainly wouldn’t hurt me, having only done technical studies 😉

I’ll raise you a general refresher of my English, where I make horrible mistakes 🙂

Having multiple Linux distros never bothered me. Starting with Slackware seems to have helped with those kinds of experiences. I’d love to have that situation over the multiple versions of Windows and Office that I’m stuck with.

The upgrade hate also bugs me. Recently I was shown an Ubuntu machine that served as a webserver and they wanted me to secure it… But it was so f**** old that support had ended (not even LTS), so no repos, no nothing.